Posts

About Fullstack-Forum


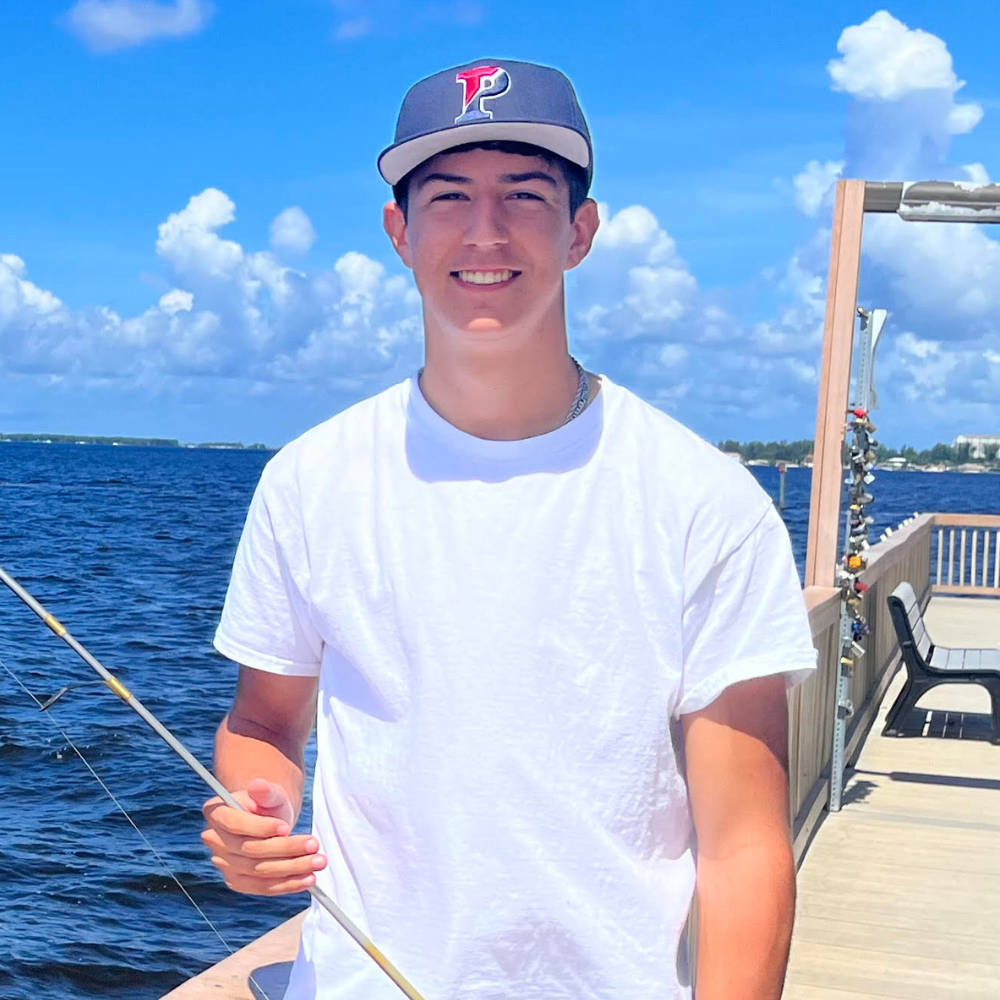

Hi! I'm Eric, and I made this site. This was my first time doing full-stack development, and it was a lot of fun (and at times quite painful) to learn each tool. I chose a stack with JavaScript because...

Another Post (A very long one...)


This post is from bob. There is a lot of text in this post, and you won't be able to read it all unless you click on this post's title. The preview will only be a short snippet of the entire text, and...

First Post


This is the first post!

About Fullstack-Forum

This forum uses the following elements ↓

View the GitHub repository!

TypeScript

TypeScript is great! Since I'm using JavaScript frameworks, TypeScript (a typed superset of JavaScript that compiles to plain JavaScript) is the primary language used throughout the entire project.
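To show what static typing buys in a project like this, here is a small sketch of how a post preview (like the short snippets on the Posts page) might be typed and generated. The `Post` interface, its fields, the `previewText` helper, and the sample text are all hypothetical and are not taken from the project's code.

```typescript
// Hypothetical shape of a forum post; the real project's fields may differ.
interface Post {
  id: number;
  title: string;
  text: string;
  points: number;
}

// Returns a short preview of a post's text, cut at a word boundary
// and followed by "..." when the text is longer than maxLength.
function previewText(post: Post, maxLength = 100): string {
  if (post.text.length <= maxLength) {
    return post.text;
  }
  const cut = post.text.slice(0, maxLength);
  const lastSpace = cut.lastIndexOf(" ");
  // Break on the last space if there is one, so no word is split in half.
  const snippet = lastSpace > 0 ? cut.slice(0, lastSpace) : cut;
  return `${snippet}...`;
}

const example: Post = {
  id: 1,
  title: "Example post",
  text: "Learning each tool in a new stack takes time, but it pays off quickly.",
  points: 0,
};

console.log(previewText(example, 30)); // Learning each tool in a new...

// The compiler rejects mistakes before the code ever runs, e.g.:
// const bad: Post = { ...example, points: "4" }; // error: string is not assignable to number
```

The benefit is that a typo in a field name or a wrongly typed value is caught at compile time rather than showing up as a broken page.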

→ Client-Side...

HTML/CSS

HTML is an obvious must-have, and styling with plain CSS is robust and scalable if done properly.

Material-UI

Although not used extensively here, Material-UI is a phenomenal React UI library, and its icon set is a great source of professional icons.

Next.js

Next.js is built on top of React (Facebook's JavaScript library for building user interfaces) and supports both server-side rendering and statically generated pages. I'm not sure whether it is the best option at scale, but it is certainly a good one.
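As a rough illustration of server-side rendering, the sketch below shows the data-fetching half of a page file in Next.js's Pages Router: Next.js calls an exported `getServerSideProps` on the server for each request and passes the returned `props` to the page component. The `PostSummary` type and the `fetchPosts` stub are hypothetical stand-ins; this is not how the project necessarily loads its posts.

```typescript
// Hypothetical summary type; the real project's data model may differ.
type PostSummary = { id: number; title: string; points: number };

// Hypothetical stand-in for a real database or GraphQL call.
async function fetchPosts(): Promise<PostSummary[]> {
  return [{ id: 1, title: "Example post", points: 3 }];
}

// Pages Router convention: Next.js runs this on the server for every
// request, then renders the page component with the returned props.
export async function getServerSideProps() {
  const posts = await fetchPosts();
  return { props: { posts } };
}
```

For a static page, the same file would export `getStaticProps` instead, which Next.js calls at build time; a single page uses one or the other, not both.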

GraphQL

GraphQL is a query language for APIs that lets the client request exactly the fields it needs. It is robust, scalable, and very straightforward to use.
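Here is a minimal sketch of what a GraphQL request looks like on the wire: the query names only the fields the client wants, and it is sent as a JSON `POST` body containing `query` and `variables`. The `posts` field, its `limit` argument, the `buildGraphQLRequest` helper, and the localhost URL are hypothetical, not the project's actual schema or endpoint.

```typescript
// The query asks for exactly three fields of each post, nothing more.
// The `posts` field and `limit` argument are hypothetical.
const POSTS_QUERY = `
  query Posts($limit: Int!) {
    posts(limit: $limit) {
      id
      title
      points
    }
  }
`;

interface GraphQLRequest {
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Builds a conventional GraphQL-over-HTTP POST request: a JSON body
// holding the query document and its variables.
function buildGraphQLRequest(
  query: string,
  variables: Record<string, unknown> = {},
): GraphQLRequest {
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ query, variables }),
  };
}

const request = buildGraphQLRequest(POSTS_QUERY, { limit: 10 });
// In a browser or Node 18+, this could be sent with:
// const response = await fetch("http://localhost:4000/graphql", request);
```

Client libraries such as URQL and Apollo Client build and send requests like this for you, and add caching on top.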

URQL

URQL is a solid client-side GraphQL library, but it is not as popular as Apollo Client. The two are very similar in functionality and syntax, and I may switch this project to Apollo Client in the future.

→ Server-Side...

Apollo-Server

Apollo Server is a scalable GraphQL server library for Node.js, so it fits perfectly in this stack.
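An Apollo Server is defined by two pieces: a schema (`typeDefs`) describing the available types and queries, and `resolvers` that fetch the data for each field. The sketch below shows both, assuming a hypothetical `Post` type and an in-memory array in place of the project's real PostgreSQL/TypeORM data layer; none of these names come from the project itself.

```typescript
// Hypothetical schema for illustration; the project's real schema is not shown here.
const typeDefs = `
  type Post {
    id: Int!
    title: String!
    points: Int!
  }

  type Query {
    posts(limit: Int!): [Post!]!
  }
`;

interface Post {
  id: number;
  title: string;
  points: number;
}

// In-memory stand-in for the database.
const db: Post[] = [
  { id: 1, title: "Example A", points: 4 },
  { id: 2, title: "Example B", points: -2 },
];

// Resolvers receive (parent, args, context, info); only args is used here.
const resolvers = {
  Query: {
    posts: (_parent: unknown, args: { limit: number }): Post[] =>
      db.slice(0, args.limit),
  },
};

// With Apollo Server installed, these two objects are passed to the server:
//   const server = new ApolloServer({ typeDefs, resolvers });
```

Because resolvers are plain functions, they can be unit-tested without starting a server.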

Express.js

Express is a minimal web framework for Node.js: there's little setup and hardly any maintenance once it's working. Unfortunately, it may not be the best option at scale, so for future projects where scalability is a concern I should probably consider alternatives.

TypeORM

TypeORM has clear syntax, with the option of high-level query abstractions or direct SQL commands. It's very easy both to implement and to understand what is going on.

PostgreSQL

PostgreSQL is widely used and easy to vertically scale.

→ Deployment...

DigitalOcean

A user-friendly cloud provider of virtual private servers (VPS), with a lot of documentation.

Dokku

Dokku is a self-hosted Platform as a Service (PaaS) that runs on top of Docker. Like Heroku, it is very user-friendly and easy to configure, which makes application deployment much easier.

Vercel

Vercel handles deployment of the frontend. After initial setup, it is surprisingly easy to use.